In this series of articles, we discuss in detail the components of Qlik Talend Data Fabric, a data management platform. We first look briefly at how Qlik Talend approaches data management, and then focus on data integration, which is the technical subject of this post.

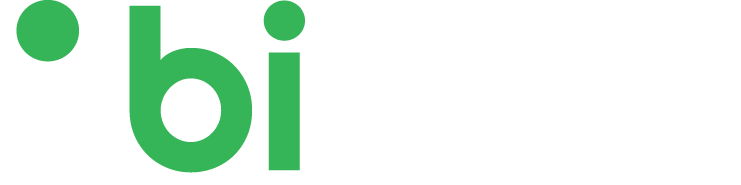

Data management can be considered in terms of the following concepts:

- Data Integration

- Data Quality

- Data Governance

- Application Integration and API Management

- Data Catalog

Whether they are licensed, open source or developed in-house, data management tools need capabilities that keep pace with the needs of institutions and with goals and trends that change every year. These requirements fall under the following main headings:

- Centralized management

- End-to-end monitoring of data quality

- Ability to manage software, business and managerial roles through the product

- Self-service applications

- Generative AI support

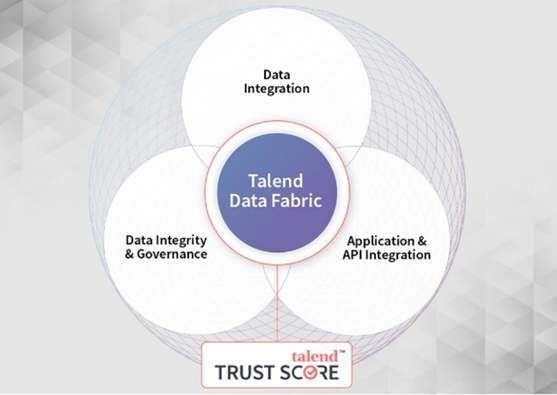

To meet these needs, Qlik Talend offers the Data Fabric Platform, an end-to-end, modular data management platform that covers the concepts above and adapts to changing requirements. The platform has been recognized as a leader by Gartner.

After briefly mentioning Qlik Talend’s data management platform, we can now elaborate on the Data Integration solution. The topics associated with the concept of integration are:

- ETL (Extract, Transform, Load), ELT (Extract, Load, Transform)

- Big data management, batch data processing, real-time data processing / streaming

- Unstructured files, XML, JSON, etc.

- Connectivity: the need to integrate with data storage systems such as databases, data warehouses and data lakes

In addition to integration, Qlik Talend offers data quality and data ownership services as key components of its solutions, which can run both in the cloud and on-premises. For Talend, data management therefore matters from the very beginning of data processing. To create a self-service working environment, the platform provides the “Data Preparation” and “Data Stewardship” services as modules.

In any data integration project, business units and software teams expect the following primary features from the data integration tool:

- Rich connectivity

- Drag-and-drop, no-code/low-code ease of use, with the flexibility to customize

- Creation and management of batch and stream dataflows

- Error and version management

- Metadata and context management

- Data preview at every step of the integration design, within the user’s authorizations

- Operation tracking and monitoring

Now, let’s take a closer look at these features in Talend Studio, Qlik Talend’s user-friendly client application.

1. Components

“Components” are the functional units used to build workflows in Talend. With more than 900 components, it is possible to connect to many systems and create workflows specific to them. New components and component add-ons are delivered to Talend Studio free of charge through monthly updates.

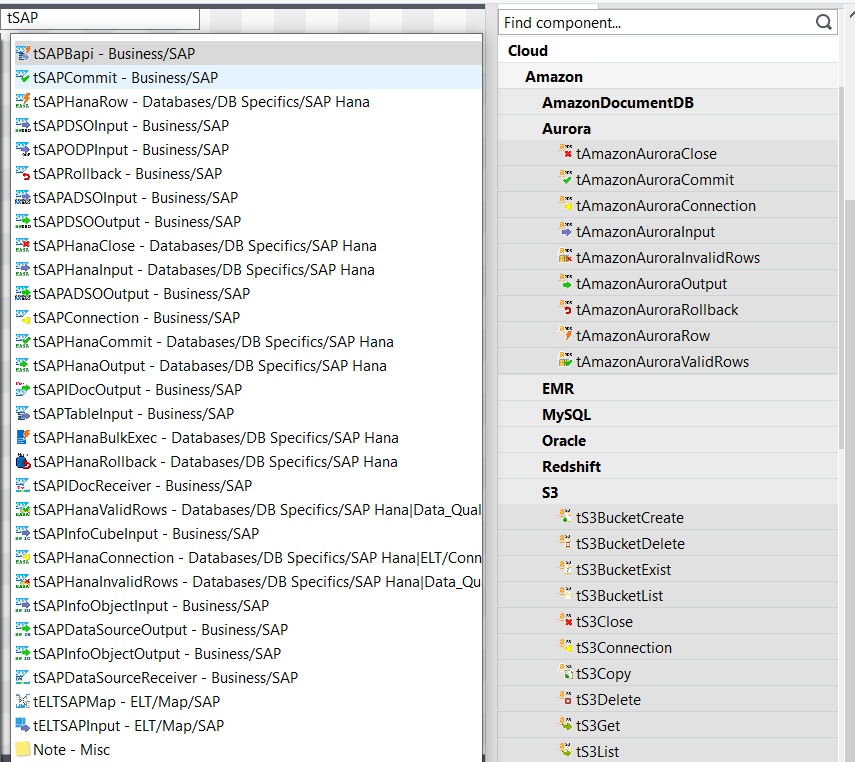

For example, when we filter only the SAP components in the image below, we can see “native components” specific to the work to be done.

Likewise, on the right side of the image, filtering the cloud providers by Amazon shows components specialized for the databases and other systems within Amazon.

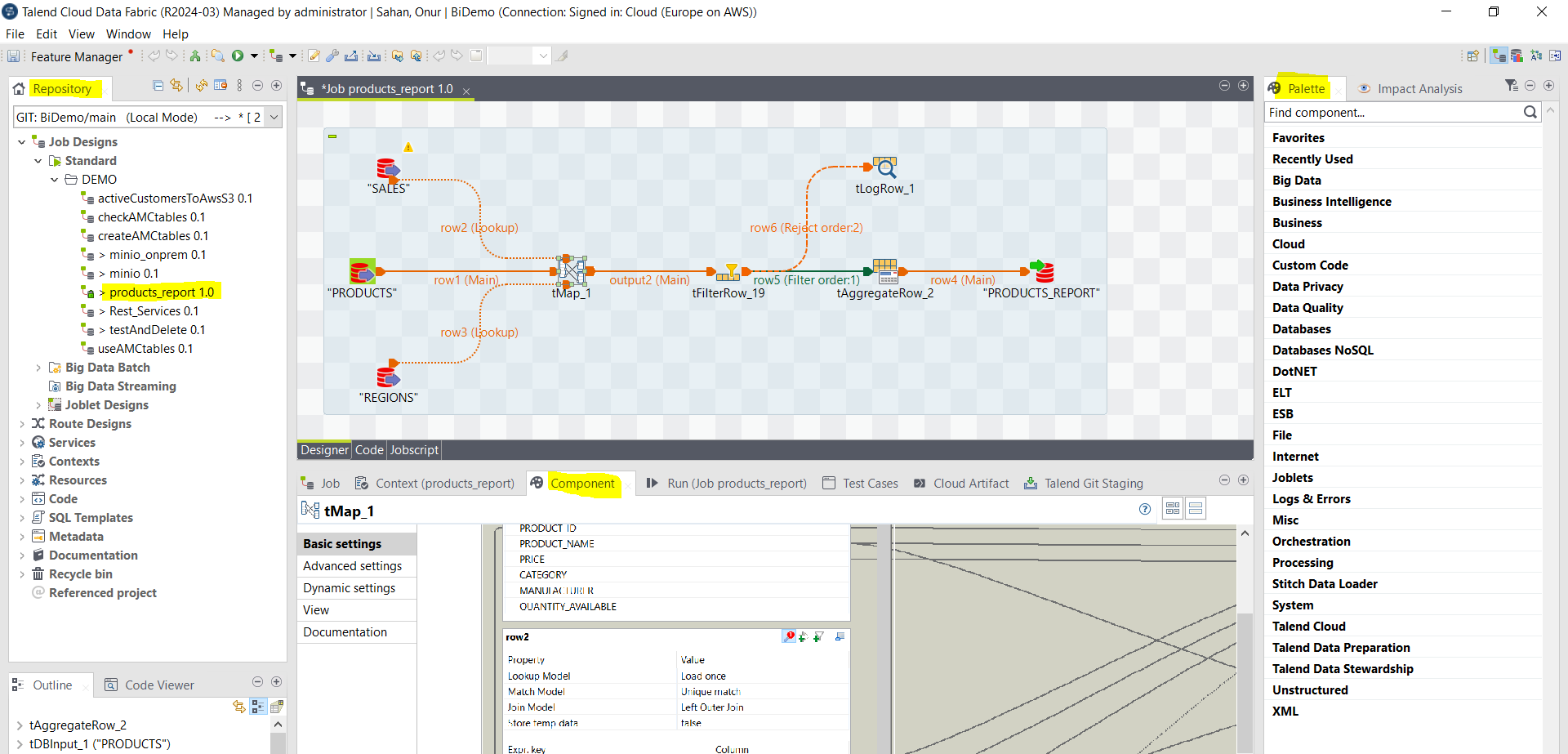

2. Technical Modeling

Components are combined on the design page by drag and drop to create workflows. The image below shows a workflow created in Talend Studio. In the Palette on the right, the components are classified by function. On the left, the “Repository” section shows the components of the “BiDemo” project, which is stored in Git. In the Designer in the middle, where the job is designed, the product, sales and region tables are joined, filtered and aggregated, and the result is written to a product report database. The tabs at the bottom are used to set the parameters of these components and of the job.

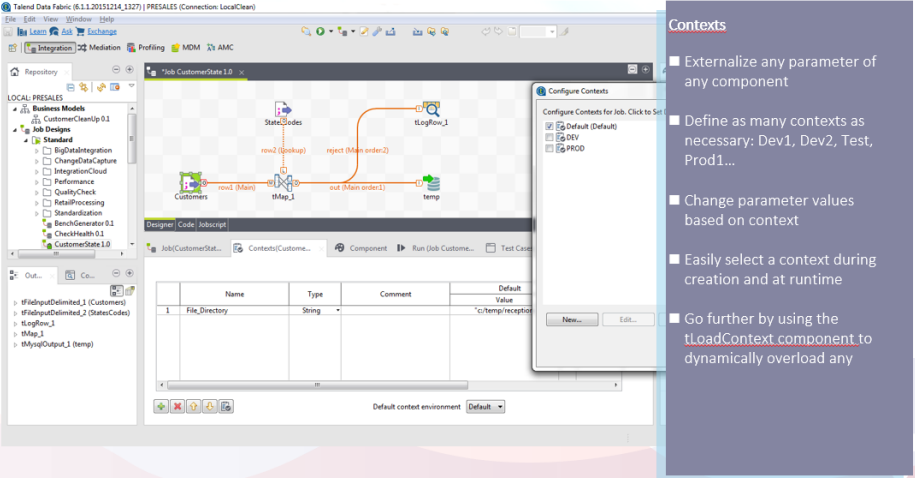
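To make the join, filter and aggregate steps more concrete, here is a minimal plain-Java sketch of the same kind of flow. It is not the code Talend Studio generates. The class `ProductReportSketch`, the `Sale` fields, the `minAmount` threshold and the sample rows are all assumptions made for illustration, since the actual BiDemo schemas are not shown here.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class ProductReportSketch {

    // Hypothetical sales row; the real BiDemo table schemas are not shown in the article.
    public static final class Sale {
        final int productId;
        final int regionId;
        final double amount;

        public Sale(int productId, int regionId, double amount) {
            this.productId = productId;
            this.regionId = regionId;
            this.amount = amount;
        }
    }

    // Joins sales to the product and region lookups, filters out small sales,
    // and sums the remaining amounts per "region / product" key.
    public static Map<String, Double> buildReport(Map<Integer, String> products,
                                                  Map<Integer, String> regions,
                                                  List<Sale> sales,
                                                  double minAmount) {
        Map<String, Double> report = new TreeMap<>();
        for (Sale sale : sales) {
            String product = products.get(sale.productId);
            String region = regions.get(sale.regionId);
            if (product == null || region == null) {
                continue; // inner join: rows without a match are dropped
            }
            if (sale.amount < minAmount) {
                continue; // filter step
            }
            // aggregate step: running sum per key
            report.merge(region + " / " + product, sale.amount, Double::sum);
        }
        return report;
    }

    public static void main(String[] args) {
        // Sample data is invented for this sketch.
        Map<Integer, String> products = Map.of(1, "Laptop", 2, "Phone");
        Map<Integer, String> regions = Map.of(10, "EMEA", 20, "APAC");
        List<Sale> sales = List.of(
                new Sale(1, 10, 100.0),
                new Sale(1, 10, 250.0),
                new Sale(2, 20, 5.0));
        System.out.println(buildReport(products, regions, sales, 10.0));
    }
}
```

In Studio, each of these steps is a configured component on the canvas rather than handwritten code; the sketch only illustrates the data logic that such a job expresses.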

3. Context

The parameters used in the DEV, TEST, UAT and PROD environments of the software lifecycle can be defined either within the job or under the “Repository” section.

This lets us access the defined contexts quickly and effectively in any new job we create. Likewise, when a parameter is updated, we have the flexibility to apply the change either to all jobs or only to the current job. For example, the “File_Directory” parameter in the predefined “Default” environment is used for the following job.

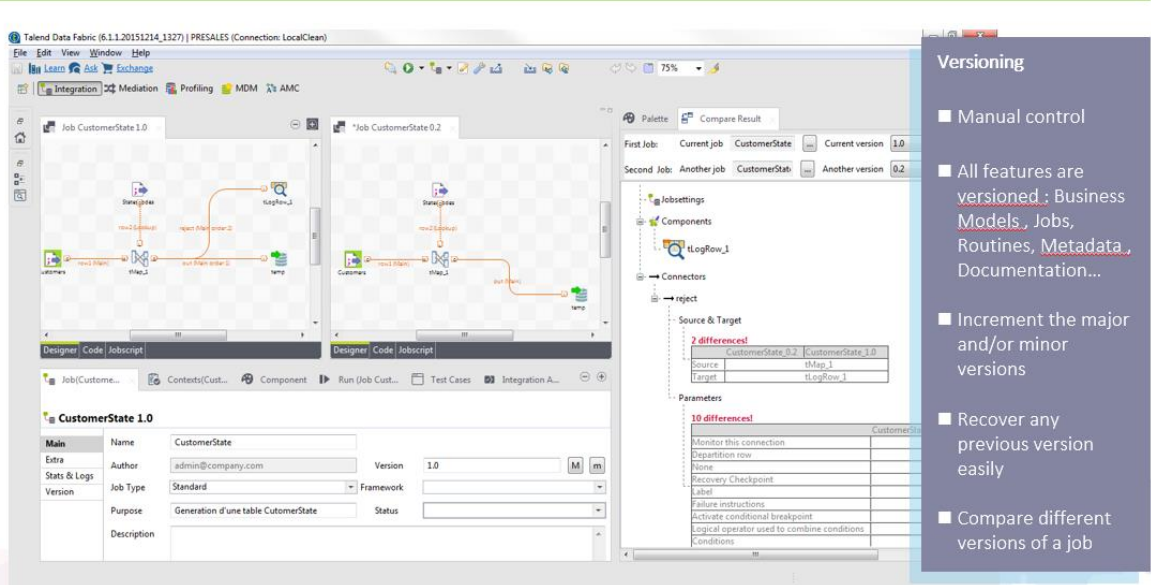
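The idea of shared contexts with optional job-level values can be sketched in a few lines of plain Java. This is an illustrative model only, not Talend’s implementation: the class `ContextSketch`, its method names and the directory values are hypothetical, and only the “Default” environment and the “File_Directory” parameter names come from the example above.

```java
import java.util.HashMap;
import java.util.Map;

public class ContextSketch {

    // Shared definitions, analogous to contexts stored under the Repository:
    // environment name -> (parameter name -> value).
    private final Map<String, Map<String, String>> repository = new HashMap<>();

    // Values that apply only to the current job.
    private final Map<String, String> jobOverrides = new HashMap<>();

    public void defineInRepository(String environment, String name, String value) {
        repository.computeIfAbsent(environment, k -> new HashMap<>()).put(name, value);
    }

    public void overrideInJob(String name, String value) {
        jobOverrides.put(name, value);
    }

    // A job-level value wins; otherwise the shared value for the environment is used.
    public String resolve(String environment, String name) {
        if (jobOverrides.containsKey(name)) {
            return jobOverrides.get(name);
        }
        Map<String, String> parameters = repository.get(environment);
        if (parameters == null || !parameters.containsKey(name)) {
            throw new IllegalArgumentException(
                    "Undefined parameter " + name + " in environment " + environment);
        }
        return parameters.get(name);
    }

    public static void main(String[] args) {
        ContextSketch context = new ContextSketch();
        // Directory values are invented for this sketch.
        context.defineInRepository("Default", "File_Directory", "/data/default/in");
        context.defineInRepository("PROD", "File_Directory", "/data/prod/in");
        System.out.println(context.resolve("PROD", "File_Directory"));
    }
}
```

Keeping the shared definitions separate from the job-level values mirrors the choice described above: a change in the shared map reaches every job that reads it, while an override touches only one job.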

4. Version Management

We can identify and compare different versions of the same job. The image below shows the “Compare Result” feature, which compares both the technical modeling and the outputs of two different versions.

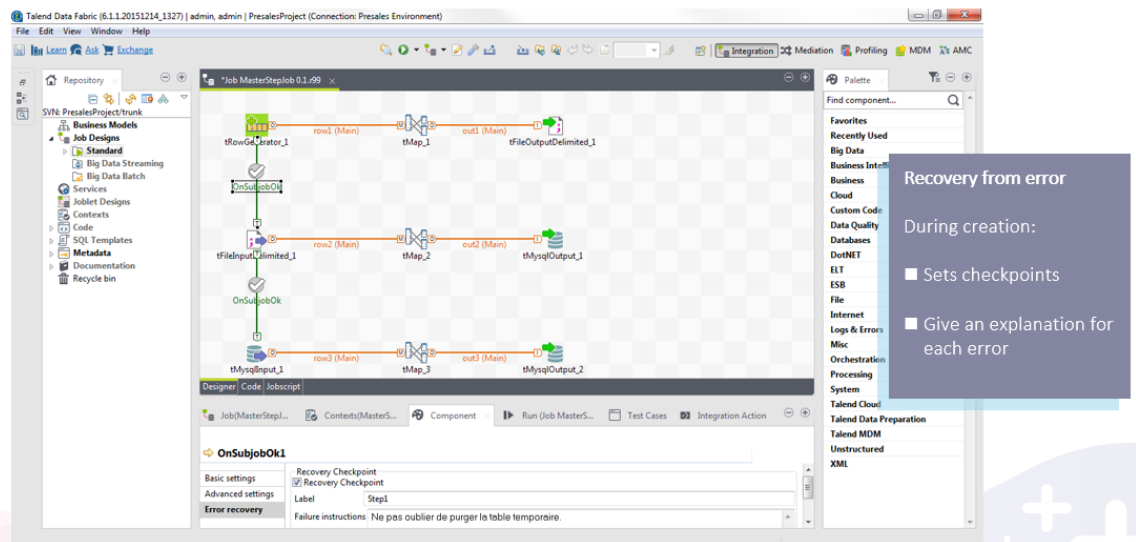

5. Error Management

To manage the dependencies between the designed jobs, Talend provides “OnSubjob” links between job groups (subjobs) and “OnComponent” links between components. These links define the actions to take when an error occurs during the flow. In the example below, the “OnSubjobOk” link starts the next subjob only if the top subjob completes successfully. In addition, a “Recovery Checkpoint” has been set on this link as a safeguard against a possible error.

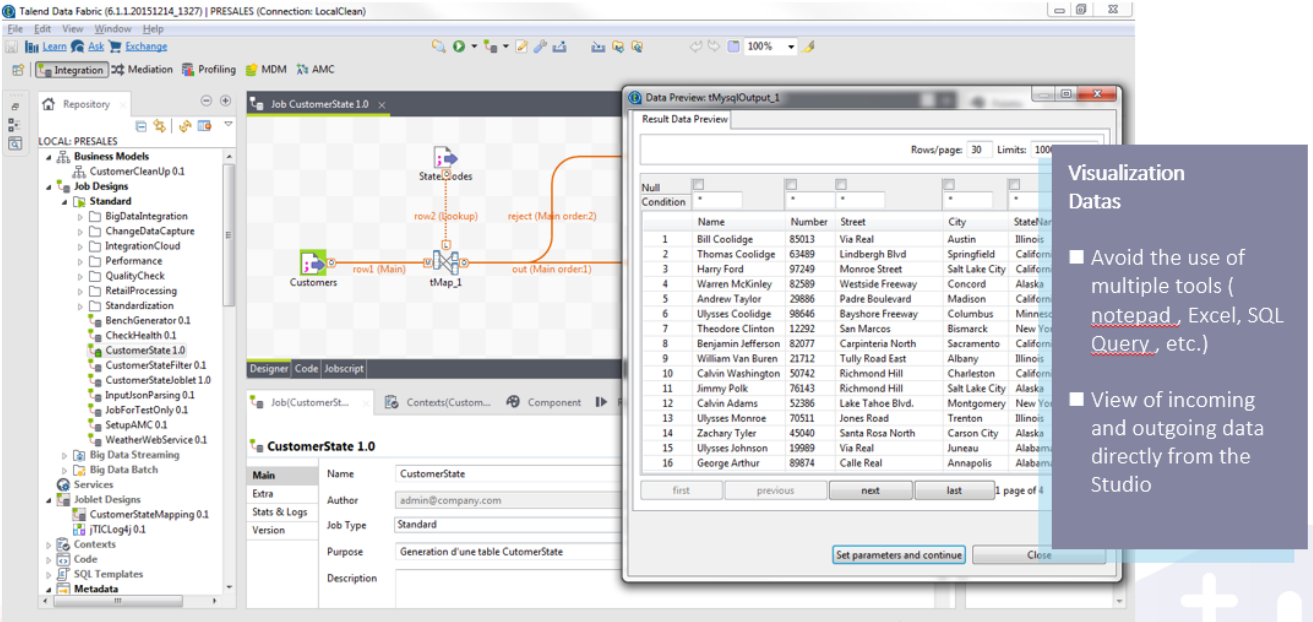
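The trigger behavior described above can be approximated in plain Java as follows. This is a simplified sketch, not Talend’s generated code: the class `SubjobTriggerSketch` and the method `runWithTriggers` are hypothetical, subjobs are modeled as `Runnable` instances, and the Recovery Checkpoint is not modeled at all. It assumes an error branch alongside the success branch, in the spirit of the OnSubjobOk and OnSubjobError triggers.

```java
import java.util.ArrayList;
import java.util.List;

public class SubjobTriggerSketch {

    // Runs a subjob, then follows either the success link or the error link.
    // The returned list records which link fired, for inspection.
    public static List<String> runWithTriggers(Runnable subjob,
                                               Runnable onSubjobOk,
                                               Runnable onSubjobError) {
        List<String> events = new ArrayList<>();
        try {
            subjob.run();
        } catch (RuntimeException e) {
            // The subjob failed: follow the error link and skip the success link.
            events.add("OnSubjobError: " + e.getMessage());
            onSubjobError.run();
            return events;
        }
        // The subjob finished without an exception: start the next subjob.
        events.add("OnSubjobOk");
        onSubjobOk.run();
        return events;
    }

    public static void main(String[] args) {
        // The three subjobs below are placeholders invented for this sketch.
        List<String> events = runWithTriggers(
                () -> System.out.println("loading input file"),
                () -> System.out.println("writing report"),
                () -> System.out.println("sending failure notification"));
        System.out.println(events);
    }
}
```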

6. Data Preview

One of the factors that increase the workload in a data processing tool is having to switch to another editor to see the data. With its Data Preview feature, Talend Studio lets you see the data without leaving the workflow.

In the example below, the “Customers” table in the MySQL database is used as an input in the job. Without leaving Studio, we can use Data Preview to see the data before running the job.

7. Impact Analysis

Impact analysis shows which jobs use a given table or parameter and which systems they feed. When a table changes, we can therefore track what the change may affect. In the example below, the right side of the image lists the jobs that use the “Customers” table and the tables it feeds.

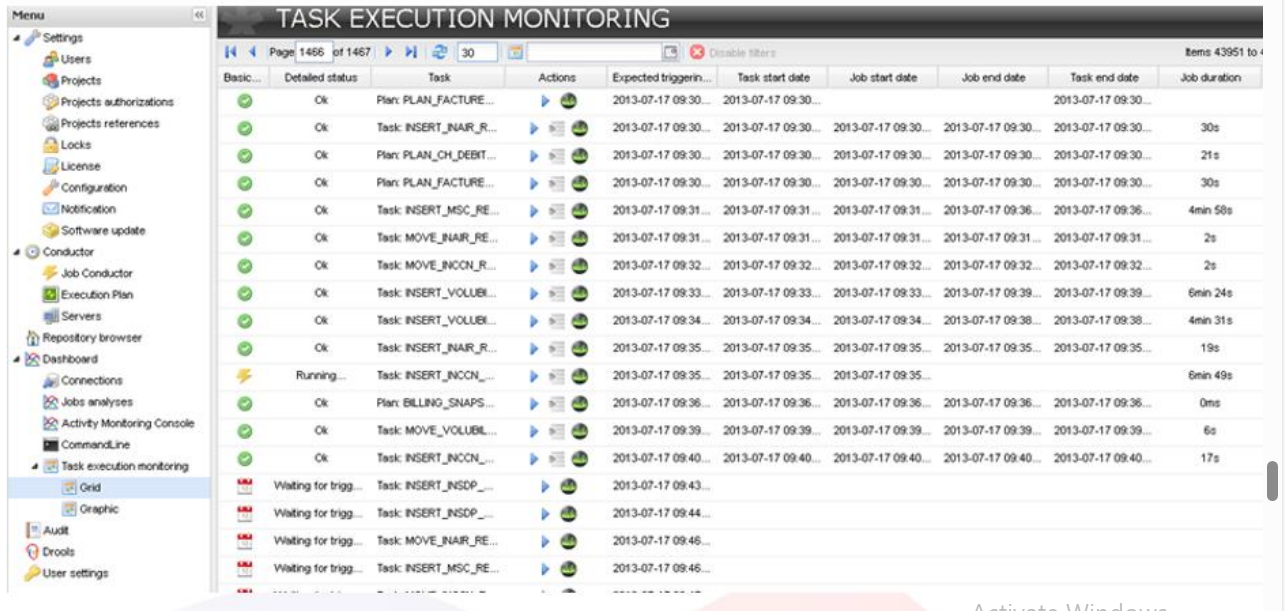
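Conceptually, impact analysis is a traversal of a dependency graph. The sketch below shows that idea in plain Java under stated assumptions: the class `ImpactAnalysisSketch`, its methods and the job and table names other than “Customers” are hypothetical, and Studio derives this information from its own repository metadata rather than from code like this.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

public class ImpactAnalysisSketch {

    // Directed edges: an item (table or job) -> the items it feeds.
    private final Map<String, List<String>> feeds = new HashMap<>();

    public void addDependency(String from, String to) {
        feeds.computeIfAbsent(from, k -> new ArrayList<>()).add(to);
    }

    // Breadth-first walk that collects everything downstream of the start item.
    public Set<String> impactedBy(String start) {
        Set<String> impacted = new LinkedHashSet<>();
        Deque<String> queue = new ArrayDeque<>();
        queue.add(start);
        while (!queue.isEmpty()) {
            String current = queue.poll();
            for (String next : feeds.getOrDefault(current, List.of())) {
                // Skip the start item and anything already visited, so cycles terminate.
                if (!next.equals(start) && impacted.add(next)) {
                    queue.add(next);
                }
            }
        }
        return impacted;
    }

    public static void main(String[] args) {
        ImpactAnalysisSketch analysis = new ImpactAnalysisSketch();
        // Job and target table names are invented for this sketch.
        analysis.addDependency("table:Customers", "job:LoadCustomers");
        analysis.addDependency("job:LoadCustomers", "table:CustomerReport");
        analysis.addDependency("table:Customers", "job:CustomerSegments");
        analysis.addDependency("job:CustomerSegments", "table:Segments");
        System.out.println(analysis.impactedBy("table:Customers"));
    }
}
```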

8. Real-Time Operations Monitoring

To monitor workflows comprehensively and in real time, we can use the AMC (Activity Monitoring Console) screens in Studio and follow job executions on the Talend Administration Center screens.

The image below shows a monitoring screen with the status, run times and durations of all jobs.

In the topics above, we have discussed data integration, one of the most important components of data management and one of the main features of Qlik Talend.

Integration designs are built in Studio, Talend’s Eclipse-based client, where we can connect to existing and new systems with enterprise-specific components and manage workflows easily throughout the software lifecycle. Studio’s Integration, Quality, Mapper and Monitoring perspectives support end-to-end operations. For self-service, the Data Preparation and Data Stewardship services are available both in the cloud and on-premises.

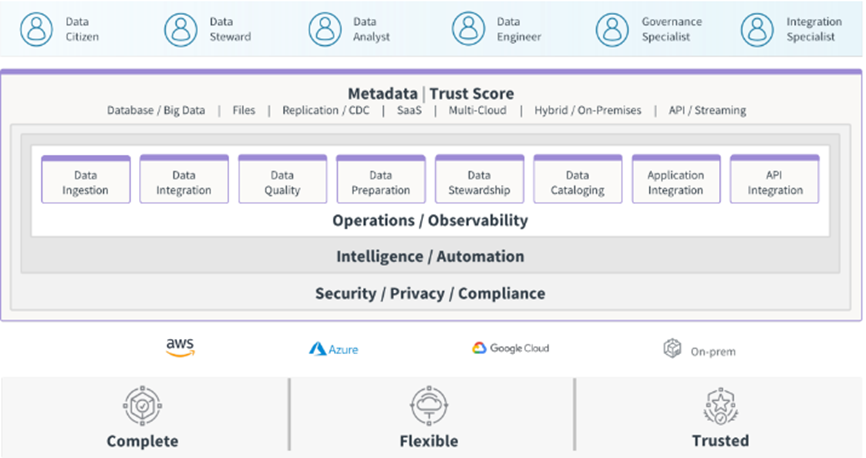

With the Talend TrustScore feature, it is possible to measure the quality metrics of the data at every stage, to design the improvement processes with the participation of different teams, to monitor the results and to maximize the health of the data throughout the organization.

In summary, Qlik Talend provides a centralized data management tool that brings together integration, operations, administration and data quality, for software developers and business users alike. It also integrates with the self-service services and enables data rules to be applied quickly in intermediate layers or during a workflow.

For questions about Talend Data Fabric (Data Management Platform), you can contact us via our BiTechnology LinkedIn page or by e-mail at info@bitechnology.com.